After watching Safiya Noble’s TED talk on racist algorithms, I knew I wanted to make a remix video that exposed this bias in facial recognition software, demanded attention to the issue and called for the hiring of more coders of color. I also read several articles on the topic, including “How White Engineers Built Racist Code,” “Facial-Recognition Software Suffers From Racial Bias, U.S. Study Finds,” and a New York Times op-ed, “The Racist History behind Facial Recognition.” Between Noble’s TED talk and these articles, I had a strong grasp of how and why facial recognition came to be and the ways in which it disproportionately harms black/African Americans and other people of color.

I wanted my story arc to first show white people’s fascination with the software, so I found clips of reporters talking excitedly about facial recognition and explaining how it would help police officers track down criminals. I ended this portion of the narrative with a clip of a reporter saying that “the possible applications [of facial recognition software] are endless,” since I thought that line perfectly summed up people’s ignorance about the technology.

I then wanted to juxtapose that with people of color talking about facial recognition and the negative effects it is having on their communities. I found clips of people being interviewed about the software and explaining why the code is racist: black/African Americans are more likely to show up in the database because police target them more in the first place. Since they’re more likely to be arrested, the algorithms are more likely to flag them as a “match” for whatever images of suspects police feed into the system.

I ended this portion of my story arc with a clip of Alexandria Ocasio-Cortez and Joy Adowaa Buolamwini, a computer scientist and digital activist who has been outspoken about racist algorithms, at a hearing about the software. AOC asks Buolamwini who is making the software and who the software is most effective on, and Buolamwini answers “white men” to both. I thought this made a good closing clip because it segues into the next part of my narrative, which is about how most of the coders being hired are white men.

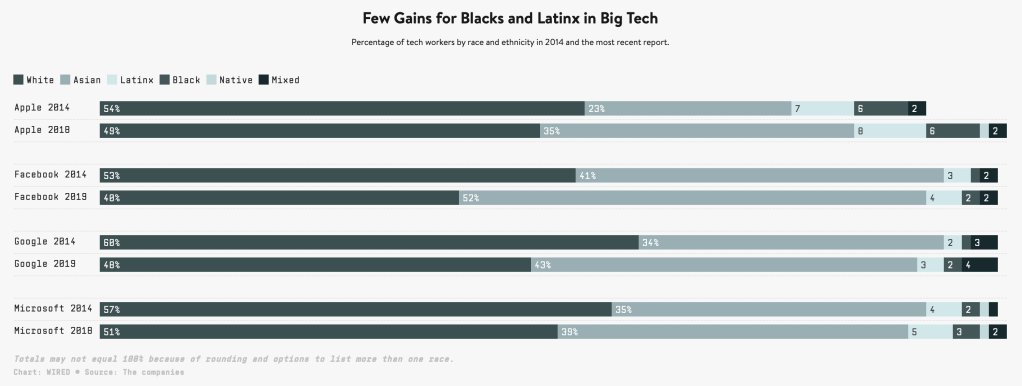

The final part of my story arc opened with a clip of statistics showing that in 2014, black people made up 1% to 6% of the workforce at four of the biggest tech companies, and that only 1% of entrepreneurs who received venture capital were black. I followed it with a couple of other clips calling for more black coders.

Lastly, for the ending of my video and the call to action, I reused clips from earlier in the video about how black people are disproportionately affected by the software and placed the “possible applications are endless” clip after each one to emphasize that injustice is taking place through code. I hoped the “possible applications are endless” clip would read as more tongue-in-cheek by the end of the video, after all the statistics and information about the harm this code does to POC.

The very last clip was of Bozoma Saint John, a black businesswoman who was Uber’s Chief Brand Officer at the time. Saint John spoke about the need for black people in tech, and I used a clip of her saying that we needed to “open the doors” for them and that we simply needed “more” POC in tech and coding. I replayed this clip a couple of times for emphasis and to contrast it with the “possible applications are endless” clip, and then added text that said “OPEN THE DOORS.” My ending card said “WE NEED MORE CODERS OF COLOR.”

Here is a list of my sources:

https://www.nytimes.com/2019/07/10/opinion/facial-recognition-race.html

https://www.wired.com/story/five-years-tech-diversity-reports-little-progress/

Here are the videos I used in my remix video:

Uber Exec: We Need More Black Women in Tech

Alexandria Ocasio-Cortez Exposes Dangers of Facial Recognition | NowThis

Is A.I. Racist? Police Under Fire For Controversial Tech Tools | The Beat With Ari Melber | MSNBC

Is Facial Recognition Software Racist? | The Daily Show

Police are using Amazon’s facial recognition technology. Privacy experts are worried.

How police manipulate facial recognition

How police are using facial recognition on civilians | The Weekly with Wendy Mesley

North Texas Police Using Facial Recognition And There Are Growing Privacy Concerns

New Facial Recognition Software for Police Targets African Americans

Is Facial Recognition Racist? Yes. And Detroit Is Using It.

Why police use of facial recognition technology raises privacy fears